I recently authored a course with my good friend Allyson Burk for the uTest University on Exploratory testing. This is a heavily viewed course because it’s part of the “Getting Started at uTest” course track which most new uTesters work through. Since this course is a foundational piece of new uTester’s development, I spent a large amount of time researching and editing to make this as accurate and consumable as possible. So far it’s been well-received earning a 5-star average rating – Not too shabby.

https://university.utest.com/what-is-exploratory-testing/

It even got front-page billing on the University home page:

Below is the content of the course:

INTRODUCTION

Today’s development world is much different than it was 10 or even 5 years ago. Product development is fast-paced and must meet the high expectations of users. As development practices have changed, so too have approaches to testing. Many testers are finding that Exploratory Testing (ET) is an effective way to test in these circumstances. The adoption and use of ET has rapidly grown, to the point where it is arguably the most popular testing approach used today.

In this course we’ll start by learning what ET is and how it differs from scripted testing (ST). Then we’ll look at why you should use ET and finally, we’ll wrap up by showing you how to get started. So let’s make like Magellan and start exploring!

THE ‘TRADITIONAL’ APPROACH TO TESTING

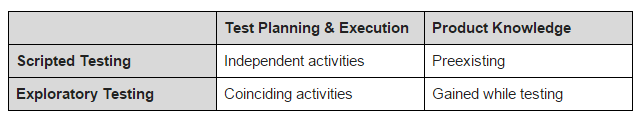

Before we look at ET, it might help if we first talk about a different, more traditional approach to testing so we can use that as a reference point to make some comparisons.

Scripted Testing (ST) is a two-step approach to testing. First the tests are written; they are planned, designed and documented. Second, the tests are executed. These two activities are done independently of each other and in many cases, the person who writes the tests is different than the person who executes them.

Generally, the tester executing the tests has some knowledge of the product, or the tests include the information needed to execute them. This is important because without that knowledge or information, the tester might not be able to execute the test or interpret its results.

WHAT IS EXPLORATORY TESTING?

Now lets compare that with ET which is simultaneous learning, test design and test execution. In other words, the tester is designing his or her tests and executing them at the same time. As an exploratory tester, your next action (the next test) is influenced by your previous actions, your observations of the product’s behavior, and your own thought process.

ET also assumes that a significant portion of the testing will be spent learning about the product. As you explore, you become more aware of how the product functions and its expected behavior. You can use that knowledge to design new and better tests. It also helps improve the analysis of the test’s results.

It is important to make the distinction between ET and other types of unscripted testing because some testers mistakenly believe that all unscripted testing is simply poking the product randomly to see what happens. Performing a series of random actions is called monkey testing and in some cases it may be a valid approach, however this is quite different from ET. With ET actions are the opposite of random — they are deliberate, driven by human thought and reasoning. Your approach is continually refined as new information is gathered and analyzed.

When an explorer goes to an uncharted region of the world, they spend months preparing. They go with a goal in mind and they rely on their abilities to adapt to changing situations. Similarly, an exploratory tester must prepare. They too have a goal and the skills needed to adjust their course. It’s true that monkey testing may occasionally find useful information, but it’s found unexpectedly. It’s the difference between discovery and exploration; luck versus skill.

WHY DO EXPLORATORY TESTING?

One of the most defining qualities of humans is our ability to think. It’s what makes us unique. It allows us to analyze a situation and make decisions, or come up with new ideas, or find new solutions to a problem. We have the capability to learn and continuously improve.

Henry Ford once said “If you always do what you’ve always done, you’ll always get what you’ve always got.” Executing scripted tests over and over will generally produce the same results. Exploratory testers use their cognitive abilities to continually improve the value of their work. They explore and adapt, they learn and adjust. ET is designed to make the most of our intellectual abilities.

ET also takes advantage of the differences between testers. Each tester’s previous experience, skills, and thought process (among other things) causes him or her to view thing in a unique way. Different testers may come up with different ways to test the same function. It may be more beneficial to have three testers test the same function in three unique ways than it is to have all three test it in exactly same way.

In many cases we need to use ET because there is no other alternative. For example, consider a situation where the tester isn’t familiar with the product under test and scripted tests are not available. In this case, it’s up to the tester to study the product, design and execute their tests. As we’ve already seen, this is the very definition of ET.

It can take a significant time to read, comprehend, and execute each step in a test case. This is especially true if you don’t already know the product, or the TC uses language or terms you’re not familiar with. When a tester is tasked to find bugs quickly, they need to be searching for bugs, not reading test cases. They need the freedom to follow promising leads, not the constraints of predefined instructions.

HOW DO I GET STARTED?

If there is one thing all new testers (including new exploratory testers) should do, it’s to start by thinking about the product in general terms; try to see the big picture. Instead of initially focusing on one specific thing, first try to understand the context in which you are working.

Some questions to consider are:

- Is this a product in development or is it already in production?

- What is the purpose of the product?

- Who are the users and how are they going to use it?

Jumping right in and banging on things might produce a bug or too, but if you hope to get the most out of ET, initial preparation and understanding your context is vital.

Now lets see how ET might look in practice. Imagine you’re a brand new tester, your boss comes to you and on your first day and says, “Here you go, this is the latest version of our app. Please begin testing and report any bugs you find.” There are no test cases and no documentation. What do you do? An exploratory tester would do something like this:

1. Get a notebook (or a digital word processor) to take notes as you go.

2. Explore the app as if you just downloaded it and want to use it yourself. If it is not an app you would typically use, then imagine you are the target market for the app.

Take a moment to really get in the mindset of a typical user. Some questions you can ask yourself are what is the goal of this app? Who would benefit from that? How do they benefit?

Let’s say this is an app to show up-to-date stock market information.

Goal of the app: Having stock market data at your fingertips.

Who benefits: Someone who is financially savvy or wants to be and has available income to be investing or has interest in other people’s investments.

How do they benefit: They benefit by the data being timely, accurate, easily accessible and displayed in a way that they can understand quickly.

Don’t worry about finding any bugs right now. You may stumble on them, but this is really just getting used to the app. Jot down anything you find that you want to explore further later.

3. Once you get a feel for the app start going back to the areas that interested you and you thought might be a place of vulnerability in the app. This knowledge about vulnerability is going to come with experience. Don’t worry if you don’t have any experience yet because you are about to get some!

4. One by one, work through each area you’ve earlier identified, exploring every function in that area. Think of what a real user might do. Come up with with some use cases or scenarios and execute those. Then think of variations and execute those. Use the results of your tests to help you come up with new ideas.

5. Focus on one bug at a time, but always be on the lookout for hints of other bugs or suspicious areas. In your notebook, quickly make a note of these areas and how to get back to them. This way you can come back and explore each one later. You could very well end up with 4 or 5 bugs just from investigating the initial bug.

6. Once you’ve exhausted that area or function of the app, move on to your next point of interest. As you repeat this process, remember what you’ve learned so far and use that information to influence your tests.

As you can see from this narrative, you are simultaneously learning, designing tests, and executing the tests. These are the core pieces of ET. Understand this and you’re on your way to becoming an exploratory tester.

CONCLUSION

Different testing needs call for different testing approaches and there are many situations where ET can prove most beneficial. ET is the inverse of scripted testing because it relies on human intellect as opposed to simply following instructions. ET is a process of continual refinement and improvement where testers adapt to situations and the information they’ve gathered. Now that you’ve been introduced to ET, our hope is that will continue to explore exploratory testing and that you can use these skills to provide the most value possible.