I recently wrote a post for the uTest blog about my certification story. It was well received, earning a good number of comments and social shares.

http://blog.utest.com/2014/08/04/certified-and-proud-a-testers-journey-part-i/

http://blog.utest.com/2014/08/05/certified-and-proud-a-testers-journey-part-ii/

A Gold-rated tester and Enterprise Test Team Lead (TTL) at uTest, Lucas Dargis has been an invaluable fixture in the uTest Community for 2 1/2 years, mentoring hundreds of testers and championing their growth. As a software consultant, Lucas has also led the testing efforts of mission-critical and flagship projects for several global companies.

Here, 2013 uTester of the Year Lucas Dargis shares his journey to becoming ISTQB-certified, and also tackles some of the controversy surrounding certifications.

In case you missed it, testing certification is somewhat of a polarizing topic. Sorry for stating the obvious, but I needed a good hook and that’s the best I could come up with. What follows is the story of my journey to ISTQB certification, and how and why I pursued it in the first place. My reasons and what I learned might surprise you, so read on and be amazed!

Certifications are evil

Early in my testing career, I was a sponge for information. I indiscriminately absorbed every piece of testing knowledge I could get my hands on. I guess that makes sense for a new tester — I didn’t know much, so I didn’t know what to believe and what to be suspicious of. I also didn’t have much foundational knowledge with which to form my own opinions.

As you might expect, one of the first things I did was look into training and certifications. I quickly found that the pervasive opinion towards certifications (at least the opinion of thought leaders I was learning from) was that they were at best a waste of time, and at worst, a dangerous detriment to the testing industry.

In typical ignoramus (it's a word, I looked it up) fashion, I embraced the views of my industry leaders as my own, even though I didn't really understand them. Whenever someone had something positive to say about certification, I'd recite all the anti-certification talking points I'd learned as if I were an expert on the topic. "You're an idiot" and "I'd never hire a certified tester" were phrases I uttered more than once.

A moment of clarity

Then one fine day, I was having a heated political debate with one of my friends (I should clarify…ex-friend). We had conflicting views on the topic of hula hoop subsidies. He could repeat the points the talking heads on TV made, but when I challenged him, asking prodding questions trying to get him to express his own unique ideas, he just went around in circles (see what I did there?).

Like so many other seemingly politically savvy people, his views and opinions were formed for him by his party leaders. He had no experience or expertise in the area we were debating, but he sure acted like the ultimate authority. Suddenly, it dawned on me that despite my obviously superior hip-swiveling knowledge, I wasn’t that much different from him. My views on certifications and the reasons behind those views came from someone else.

As a tester, I pride myself on my ability to question everything, and to look at situations objectively in order to come to a useful and informative conclusion. But when it came to certifications, I was adopting the opinions of others and acting like I was an expert in an area I knew nothing about. After that brief moment of clarity, I realized how foolish I’d been, and felt quite embarrassed. I needed to find the truth about certifications myself.

Now let me pause here for a second to clarify that I'm not saying everyone should go take a certification test so they can have first-hand experience. I think we all agree that it's prudent to learn from the experience of others. For example, most of us have never put a hand in a fire, but we all know that if we did, it would get burned.

It's perfectly fine to let other people influence your opinions as long as you are honest about the source of those opinions. For example, you might say:

Testers I respect have said that testing certificates are potentially dangerous and a waste of time. Their views make sense to me, so it’s my opinion that testers should look for other ways to improve and demonstrate their testing abilities.

However, if you’re going to act like an expert who has all the answers, you should probably be an expert who has all the answers.

Studying for the exam

I took my preparation pretty seriously. Most of my study material came from this book, which I read several times. I spent a lot of time reading the syllabus, taking practice exams and using a few study apps on my phone. I also looked through all the information on the ISTQB site, learning all I could about the organization and the exam.

Since I knew all the anti-certification rhetoric about how this test simply measures your ability to memorize, I memorized all the key points from the syllabus (such as the 7 testing principles). But I also took my studying further, making sure I understood the concepts so I could answer the K3 and K4 (apply and analyze) questions.

Heading into the test, I felt quite confident that I was going to ace it.

Be sure to check out the second part of Lucas' story at the uTest Blog.

————————————————————————————————-

This is the second part of tester and uTest Enterprise Test Team Lead Lucas Dargis' journey to becoming ISTQB-certified. Be sure to check out Part One from yesterday.

The test

After about 3 minutes, I realized just how ridiculous the test was. Some of the questions were so obvious it was insulting, some were so irrelevant they were infuriating, and others were so ambiguous all you could do was guess.

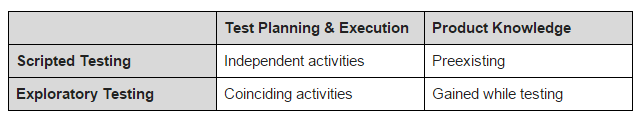

Interestingly, testers with experience in context-driven testing will actually be at a disadvantage on this test. When you understand that the context of a question influences the answer, you realize that many of the questions couldn’t possibly have only one correct answer, because no context was specified.

You are allotted 60 minutes to complete the test, but I was done and out of the building in 27 minutes. I didn't finish quickly because I knew all the answers; it was rather the exact opposite. Most of the questions were so silly that all I could do was select answers randomly. Here are two examples:

“Who should lead a walkthrough review?” – Really? I was expected to memorize all the participants of all the different types of meetings, most of which I’ve never seen any team actually utilize?

“Test cases are designed during which testing phase?” – Umm…new tests and test cases should be identified and designed at all phases of the project as things change and your understanding develops.

According to the syllabus, there are "right" answers to all the questions, but most thinking testers, those not bound by the rigidity of "best practices," will struggle because they know there is no single right answer.

Despite guessing on many questions, I ended up passing the exam, but that really wasn't a surprise. The test requires only 65% to pass, so a person could probably pass with minimal preparation, simply by making educated guesses. I left the test in a pretty grumpy mood.

The aftermath

For the next few days, I was annoyed. I felt like I had completely wasted my time. But then I started thinking about why I took the test in the first place, and what that certification really meant. As a tester striving to become an expert, I wanted to know for myself what certifications were about. Well — now I have first-hand experience with the process. I’m able to talk about certifications more intelligently because my opinions and views about them are my own, not borrowed from others.

Former tennis great Arthur Ashe once said, “Success is a journey, not a destination. The doing is often more important than the outcome.” I think this sums up certifications well. For me, there was no value in the destination (passing the exam). I’m not proud of it, and I don’t think it adds to or detracts from my value as a tester. However, I felt there was value in the journey.

Through my studying, I actually did learn a thing or two about testing. I was able to build some structure around the basic testing concepts. I learned some new testing terms that I have used to help explain concepts such as tester independence. Studying gave me practice analyzing and questioning the "instructional" writing of others (some of which I found inaccurate, misleading or simply worthless). The whole process gave me insight into what is being taught, which helps me better understand why some testers and test managers believe and behave the way they do.

But more importantly, I became aware of the way I formulate opinions and of how susceptible I am to the teachings of confident and powerful people.

The lessons I learned from testing leaders early in my career freed me from the constraints of the traditional ways of thinking about testing, but in return, I took on the binds associated with more modern testing thinking. Ultimately, I came to a conclusion similar to that of many wise testers before me, but I did so on my terms, in a way that I’m satisfied with.

One day, I hope to have a conversation with some of the more boisterous certification opponents and say, "I decided to get certified, in part, because you were so adamantly against it. I didn't do it out of defiance, but rather out of a quest for deeper understanding."

If you made it this far, I thank you for sharing this journey with me. Mention in the Comments below that you finished the story, and I'll send you a pony.

e, beer and spirits. Yeah, that sounds better.